What exactly are Google Bots?

Most likely, you are already familiar with some SEO best practices: structuring the website, using heading tags (H1, H2, etc.) correctly, using keywords (without over-optimizing them), creating unique content, and so on. If so, you have almost certainly heard of Google bots as well.

But what do you actually know about Google bots, or crawlers? Optimizing for them is not the same as classic SEO, and it goes deeper. While SEO optimizes your content for the search engine's results, Google bot optimization focuses on Google's search robots themselves (also known as Google spiders, site crawlers or Googlebot).

The two processes have a lot in common, but it is worth clarifying the main differences, because they can affect how high your site ranks in search results. In particular, we will look at "site crawlability": how easy it is for Google bots to "read" your site. Crawlability is one of the most important factors in how transparent a website is to search engines.

What is a Google bot?

Site crawlers, or Google bots (also called indexing robots), are automated programs that examine web pages and build an index from them. If a web page allows robots to access it, they can add that page to the index, and only then does the page become available to other search engine users.

To optimize for Googlebot, you first need to understand how a Google spider crawls a site. The four points below explain exactly how this works.

First, there is the "crawl budget": roughly, the number of pages Google's bots can and want to crawl on your site in a given period. The more authority a page has, the more attention it receives from Google.

Google bots access a website constantly

Here's what Google says about it: "For most sites, Googlebot shouldn't access your site more than once every few seconds on average." In other words, as long as you have made it easy for them to get in, your site is under constant watch by Google's "spiders".

Many SEO experts debate the so-called "hit rate" (or "crawl rate") and look for an optimal crawl frequency that would earn the website more authority. This is a misunderstanding: the crawl rate only describes how fast Googlebot makes requests to your site, not how much authority the site has.

You can even adjust this rate in Google Webmaster Tools (now Google Search Console). What actually influences your position in the SERP rankings are factors such as the number of quality links, the uniqueness and originality of your content, and mentions on social media.

Note that Google bots do not crawl every page constantly, which is why publishing new, quality content attracts more and more attention from them. Pages that are not re-crawled remain in Google's cache: a snapshot of the page as Google last "read" it. That archived version is what counts for Google until the page is crawled again and the content changes are indexed.

The robots.txt file is the first thing Google bots scan to find out what should be crawled

This means that if a page is marked to be ignored there, the robots will not crawl it, so Google cannot index its content.

The XML Sitemap is a guide for Google's bots

An XML sitemap helps bots find out which pages on the site should be crawled and indexed. It makes crawling easier because a site may be built in a hierarchy that is difficult for a robot to navigate automatically. A good sitemap can also help low-authority pages, with few backlinks and weaker content, get discovered.

6 strategies to optimize your website for Google

As you can see, optimizing for Google's spiders should come before classic SEO. So let's see what we can do to make it easier for Google bots to access and index the site.

1. Overusing rich media and scripts is not effective

It is good to know that Google bots cannot crawl frames, Flash, JavaScript or DHTML content as easily as they crawl plain HTML.

Moreover, at the time of writing Google had not clearly stated whether Googlebot can fully process Ajax or JavaScript, so it is safer not to rely on them when building a website.

Although Matt Cutts (a former Google employee who helped optimize Googlebot) has stated that JavaScript can be "read" and interpreted by web spiders, Google's own guidelines sound more cautious: "If fancy features such as JavaScript, cookies, session IDs, frames, DHTML, or Flash keep you from seeing all of your site in a text browser, then search engine spiders may have trouble crawling your site."

As a rule of thumb, use JavaScript sparingly, only where it is really needed (for example, to read the cookies your site has stored).
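Google's "text browser" test can be approximated locally. The sketch below, using only Python's standard-library `html.parser`, extracts the text a client that does not run JavaScript would see, skipping `<script>` and `<style>` contents. The sample page is hypothetical: its price is injected by a script, so it never shows up in the text-only view.

```python
from html.parser import HTMLParser


class TextOnlyView(HTMLParser):
    """Collects the visible text of a page without executing any JavaScript."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # > 0 while inside <script> or <style>
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text that is not part of a script or stylesheet.
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def text_view(html: str) -> str:
    parser = TextOnlyView()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical page: the price only exists after the script runs.
PAGE = """<html><body>
<h1>Our services</h1>
<div id="prices"></div>
<script>document.getElementById("prices").textContent = "Prices from $99";</script>
</body></html>"""

print(text_view(PAGE))
```

If important content is missing from this output, a crawler that does not execute scripts will not see it either.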

2. Don't underestimate your robots.txt file

Have you ever wondered what the robots.txt file is for? It's a standard file used in many SEO strategies, but is it really useful?

For starters, this file is recognized by all the major search engines (Google, Bing, Yandex, Yahoo, Baidu, etc.). Second, it is where you decide which files you want Google to crawl: if there is a document or page you do not want Google bots to access, you must specify it in robots.txt.

This way, Google immediately sees what it should and should not crawl. If you do not exclude a page, Googlebot will "read" it and may display it in its search results. So the main function of the robots.txt file is to tell Google's robots where they should not "look".
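To check what a robots.txt file actually blocks, Python's standard-library `urllib.robotparser` can evaluate the rules locally. This is a minimal sketch assuming a hypothetical site at `www.example.com`; note that this parser uses simple prefix matching and does not implement Google's `*` and `$` wildcard extensions, so treat its answers as an approximation of how Googlebot reads the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com: keep all bots out of the admin
# area and the shopping cart, and tell them where the XML sitemap lives.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/

Sitemap: https://www.example.com/sitemap.xml
"""


def load_rules(text: str) -> RobotFileParser:
    """Parse robots.txt rules from a string instead of fetching them over HTTP."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


parser = load_rules(ROBOTS_TXT)
for url in ("https://www.example.com/blog/google-bots",
            "https://www.example.com/admin/login"):
    verdict = "may crawl" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"{url}: {verdict}")
```

Running a check like this before deploying a new robots.txt helps catch a rule that accidentally blocks pages you want indexed.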

3. Unique and quality content matters most

As a rule, a website that publishes new content regularly is crawled more frequently, so it can get even more traffic.

Although PageRank influences how often Googlebot checks a website, it can matter less than the usefulness and freshness of the content: a page with low PageRank but fresh, useful content can still be crawled often.

So your main goal is to get your lower-authority pages crawled more often, since they can attract visitors just as well as pages with higher authority.

4. Pages that seem endless

If your site has pages that seem to never end, or that load new content with each scroll, this does not mean they cannot be optimized for Googlebot. It will be a little more difficult to "teach" the bot what those pages are about, but not impossible: you just have to follow Google's recommendations for making such pages easier and more efficient to index.
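One of Google's recommendations for infinite scroll is to also support paginated loading, so every chunk of content has its own URL that works without scrolling or JavaScript. The sketch below assumes a hypothetical `?page=N` URL scheme on `www.example.com`; it computes the component-page URLs and renders plain `<a href>` links a crawler can follow.

```python
from __future__ import annotations

from math import ceil
from urllib.parse import urlencode


def component_page_urls(base_url: str, total_items: int, per_page: int) -> list[str]:
    """Return one URL per chunk ("component page") of an infinite-scroll listing.

    Assumes the server can render each chunk on its own at ?page=N, so a bot
    that never scrolls can still reach every item.
    """
    if per_page <= 0:
        raise ValueError("per_page must be positive")
    pages = max(1, ceil(total_items / per_page))
    return [f"{base_url}?{urlencode({'page': n})}" for n in range(1, pages + 1)]


def pagination_nav(urls: list[str]) -> str:
    """Plain <a href> links that a crawler can follow without running JavaScript."""
    return "\n".join(f'<a href="{url}">Page {i}</a>' for i, url in enumerate(urls, start=1))


urls = component_page_urls("https://www.example.com/blog", total_items=45, per_page=20)
print(pagination_nav(urls))
```

Users still get the infinite scroll; the paginated URLs simply give bots a second, crawlable route to the same content.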

5. Building internal links is an effective strategy

Internal linking is very important if you want to make crawling easier for bots.

If your pages are well linked to one another, it will be much easier for bots to "read" your site. A balanced web design pleases both regular users and Google bots.

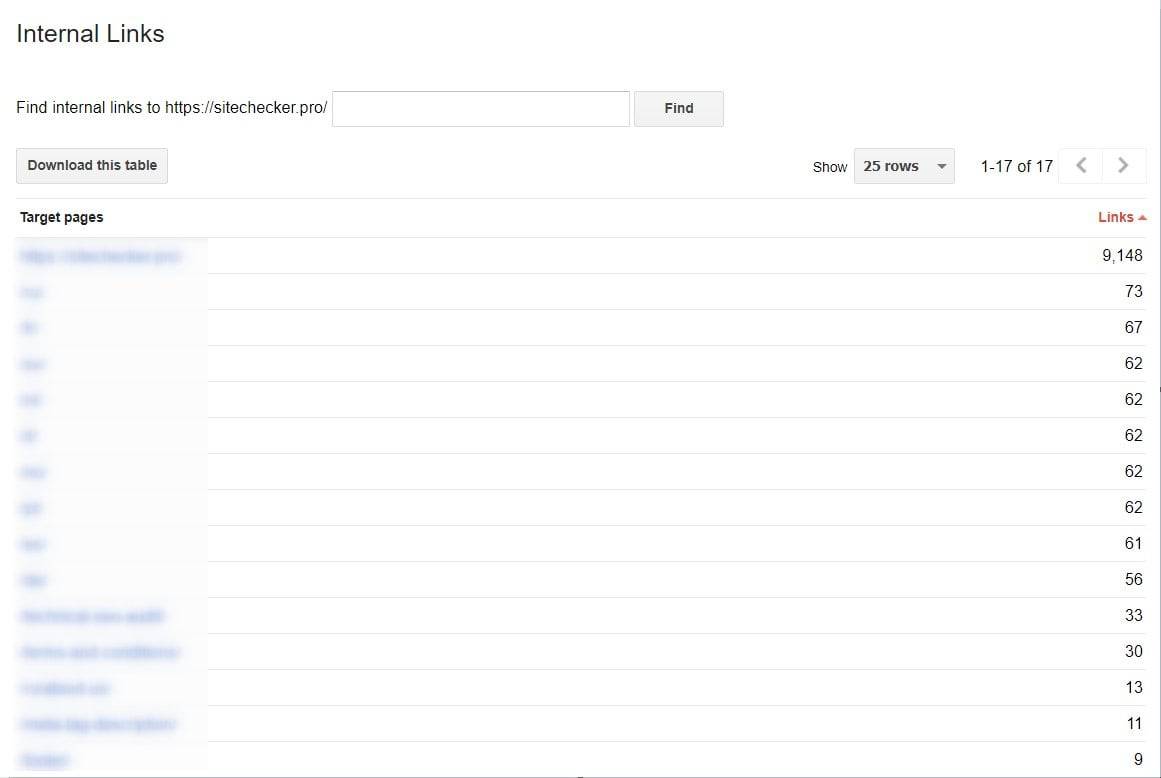

To analyze the internal links you have built, check Google Webmaster Tools > Links > Internal Links.

The page at the top of the list is the one with the most internal links pointing to it.
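The same kind of ranking can be reproduced on your own pages. This sketch uses Python's standard-library `html.parser` and `urllib.parse` to count how many internal links point at each URL; the `pages` dictionary stands in for a hypothetical, already-downloaded site, and links to other domains, fragments and self-links are ignored.

```python
from __future__ import annotations

from collections import Counter
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag fed to it."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def count_inbound_internal_links(pages: dict[str, str]) -> Counter:
    """Count how many internal links point at each URL.

    `pages` maps each (hypothetical) page URL to its HTML source.
    """
    counts: Counter = Counter()
    for page_url, html in pages.items():
        collector = LinkCollector()
        collector.feed(html)
        collector.close()
        site = urlparse(page_url).netloc
        for href in collector.hrefs:
            # Resolve relative links and drop "#fragment" parts.
            target = urldefrag(urljoin(page_url, href)).url
            if urlparse(target).netloc == site and target != page_url:
                counts[target] += 1
    return counts


pages = {
    "https://www.example.com/": '<a href="/services">Services</a> <a href="/blog">Blog</a>',
    "https://www.example.com/blog": '<a href="/services">Services</a> <a href="https://other.example.org/">Partner</a>',
    "https://www.example.com/services": '<a href="/">Home</a>',
}
for url, n in count_inbound_internal_links(pages).most_common():
    print(n, url)
```

Pages that end up with zero or one inbound link are the ones bots are most likely to reach late, or not at all.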

6. Sitemap.xml is also essential

Sitemap.xml is simply a map of your site: a file that tells robots which pages exist and how to reach them.

So why use it? Because many modern sites are difficult to crawl and have many pages that are not linked from anywhere else.

The map makes the indexing process a lot easier and keeps the bot from getting lost as it looks for where to go next to crawl the entire site. It does not guarantee that every page will be crawled and indexed by Google, but it makes it much more likely that Google finds them all.
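A sitemap following the sitemaps.org protocol is a short XML file, so it can be generated with Python's standard-library `xml.etree.ElementTree`. This is a minimal sketch: the URLs and dates are hypothetical, and it only emits the required `<loc>` plus an optional `<lastmod>`. The protocol limits a single sitemap file to 50,000 URLs, so larger sites need several sitemaps and a sitemap index.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol


def build_sitemap(entries):
    """Build sitemap XML from (url, lastmod) pairs.

    `lastmod` is a W3C date such as "2024-01-15", or None to omit it.
    """
    entries = list(entries)
    if len(entries) > MAX_URLS:
        raise ValueError("split large sites into several sitemaps plus a sitemap index")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes & and < for us
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap_xml = build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog/google-bots", "2024-01-10"),
    ("https://www.example.com/contact", None),
])
print(sitemap_xml)
```

The resulting file is usually served at the site root and can be submitted in Webmaster Tools or announced with a `Sitemap:` line in robots.txt.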

How to analyze Google bot activity?

If you want to see the activity performed by the Google bot on your site, then you can use Google Webmaster Tools.

We also recommend checking the data this service provides regularly, because it can alert you to problems in the crawling process. Just open the "Crawl" section of your Webmaster Tools panel.
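Your own server logs are a complementary source of Googlebot activity. The sketch below assumes access logs in the common Apache/Nginx "combined" format and counts requests per day from user agents containing "Googlebot", plus how many of them got 4xx or 5xx errors. The log lines are made up; since anyone can fake a user agent, Google recommends confirming real Googlebot traffic with a reverse DNS lookup, which this sketch does not do.

```python
import re
from collections import Counter
from datetime import datetime

# Apache/Nginx "combined" log format:
# host ident user [time] "request" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_activity(lines):
    """Return (requests per day, error responses by status) for Googlebot hits.

    Only the user-agent string is checked, so spoofed bots are counted too.
    """
    per_day, errors = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # unparseable line or not a Googlebot request
        day = datetime.strptime(match.group("time"), "%d/%b/%Y:%H:%M:%S %z").date()
        per_day[day] += 1
        if match.group("status")[0] in "45":  # 4xx client / 5xx server errors
            errors[match.group("status")] += 1
    return per_day, errors


BOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'192.0.2.10 - - [15/Jan/2024:10:00:00 +0000] "GET /blog HTTP/1.1" 200 5120 "-" "{BOT}"',
    f'192.0.2.10 - - [15/Jan/2024:10:00:05 +0000] "GET /old-page HTTP/1.1" 404 0 "-" "{BOT}"',
    '203.0.113.7 - - [15/Jan/2024:10:01:00 +0000] "GET / HTTP/1.1" 200 2048 "-" "Mozilla/5.0"',
    f'192.0.2.10 - - [16/Jan/2024:09:30:00 +0000] "GET / HTTP/1.1" 200 2048 "-" "{BOT}"',
]

per_day, errors = googlebot_activity(SAMPLE_LOG)
for day, hits in sorted(per_day.items()):
    print(day, hits)
print("errors:", dict(errors))
```

A sudden drop in daily hits, or a rise in 404 and 5xx responses to Googlebot, is a signal to look at the crawl error reports.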

Common scan errors

Checking whether your site runs into errors during crawling is quite simple. If you do it consistently, you can catch errors, penalties, unindexed pages and other issues before they cause damage.

Some sites have minor crawling problems that do not affect their traffic. Errors may appear after recent changes or updates to the website. However, if they are not fixed in time, they can lead to a drop in traffic and the loss of top positions in search results.

Below you can see some examples of errors:

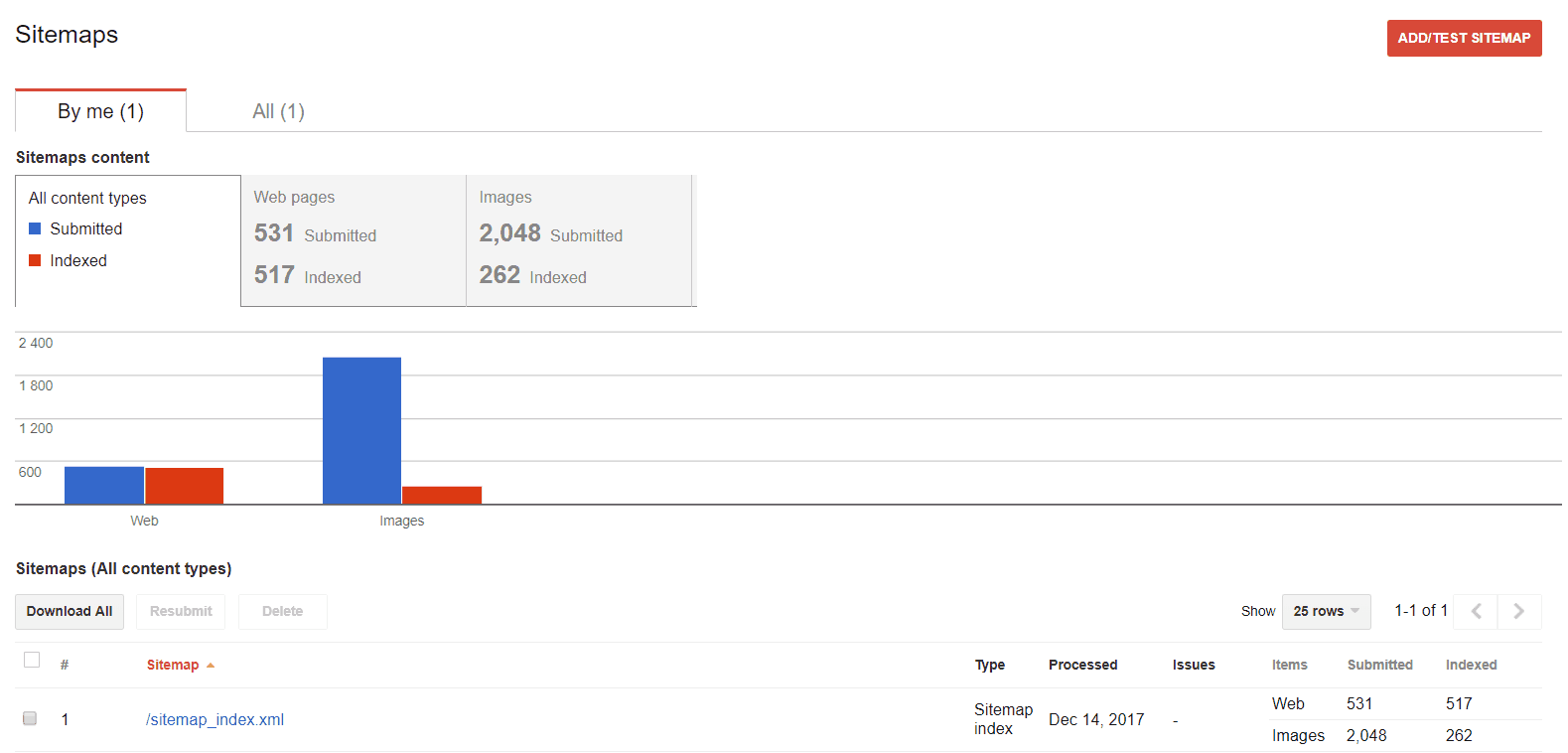

Sitemaps

Use this feature to submit or update your sitemap, check how it was processed and see which of its pages are indexed.

"Fetching"

The "Fetch as Google" section shows you a website or web page the way Google's robots see it.

Read/scan statistics

Google can also tell you how much data its "web spider" crawls on your site each day.

So if you publish new, quality content regularly, you should see positive results in these statistics.

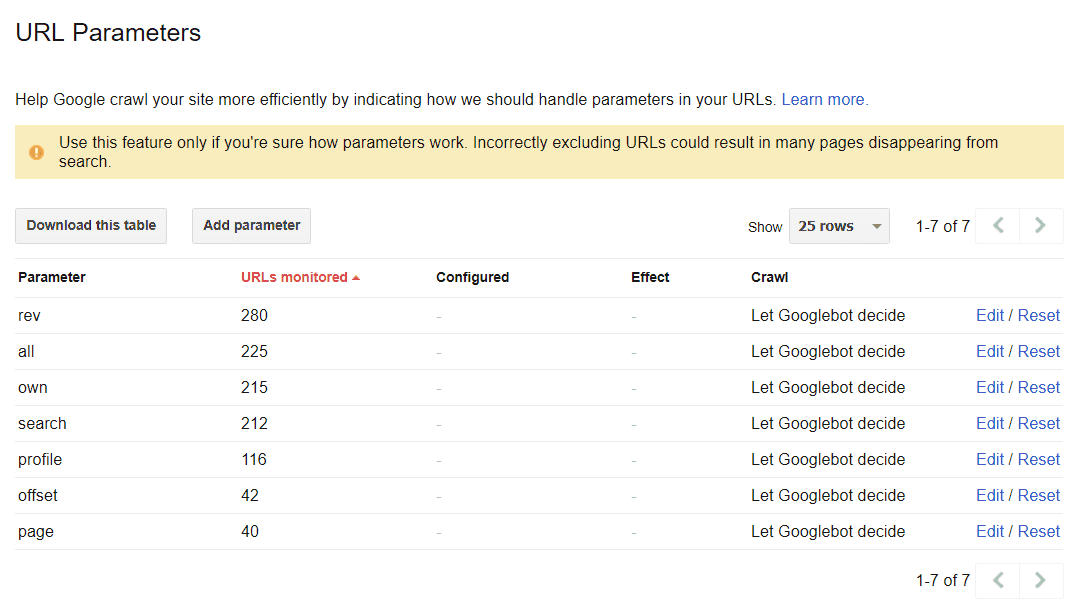

URL Parameters

This section shows how Google's crawlers deal with the parameters in your site's URLs and lets you tell Google how those parameters affect your pages, so it can index your website more efficiently.
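Parameters matter because many URL variants can serve the same page, and each variant costs crawl budget. This sketch, using Python's standard-library `urllib.parse`, collapses variants into one canonical URL by dropping parameters that do not change the content and sorting the rest. The `IGNORED_PARAMS` set is a hypothetical example; which parameters are safe to drop depends entirely on your site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters that, on this particular site, never change the page content.
IGNORED_PARAMS = {"sessionid", "utm_source", "utm_medium", "utm_campaign"}


def canonical_url(url: str) -> str:
    """Drop content-neutral parameters and sort the rest, so variants collapse to one URL."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    # Fragments are never sent to the server, so they are dropped as well.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


variants = [
    "https://www.example.com/shoes?color=red&sessionid=abc123",
    "https://www.example.com/shoes?utm_source=newsletter&color=red",
    "https://www.example.com/shoes?color=red",
]
print({canonical_url(u) for u in variants})
```

The canonical form is a natural choice for the URLs you list in your sitemap and link to internally.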

Ultimately, how your pages are crawled is up to Google's robots. All we can do is help them work more efficiently and make sure they "read" everything they need to.

Photo source: Sitechecker